My research tries to bring common-sense understanding to robotic perception.

Interacting with the environment requires perceiving objects and understanding how actions influence their movement and shape.

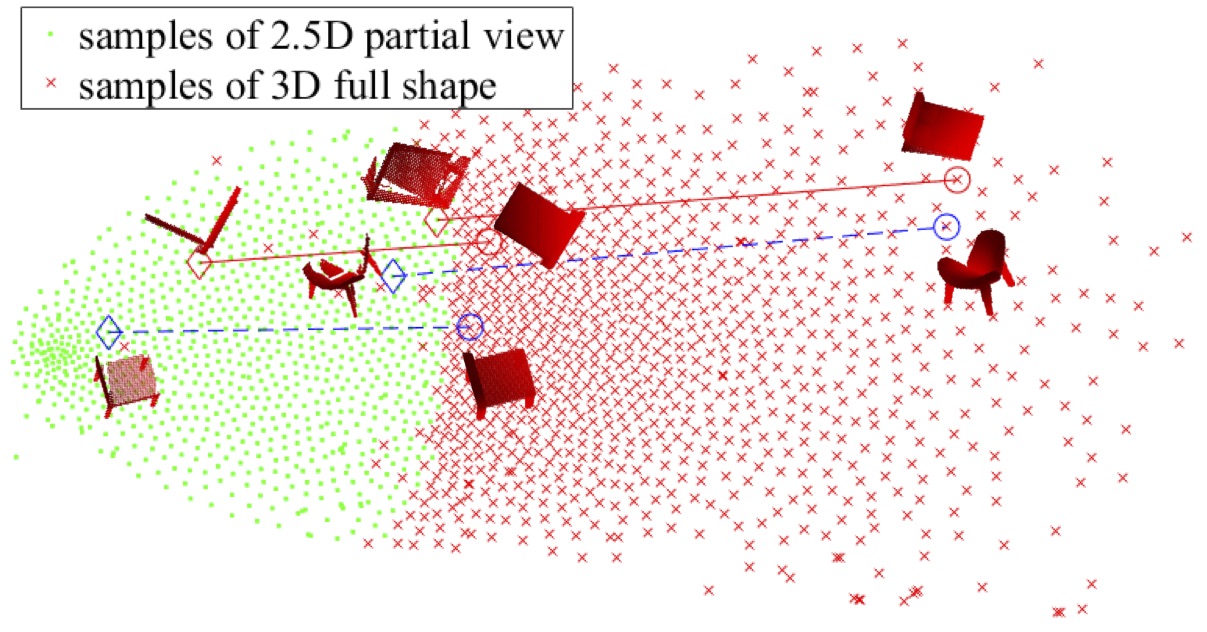

Generative perception models can make sense of partial and noisy observations and reconstruct the underlying shape and semantics (Hu et al., 2021), (Hu et al., 2020), (Yang et al., 2018).

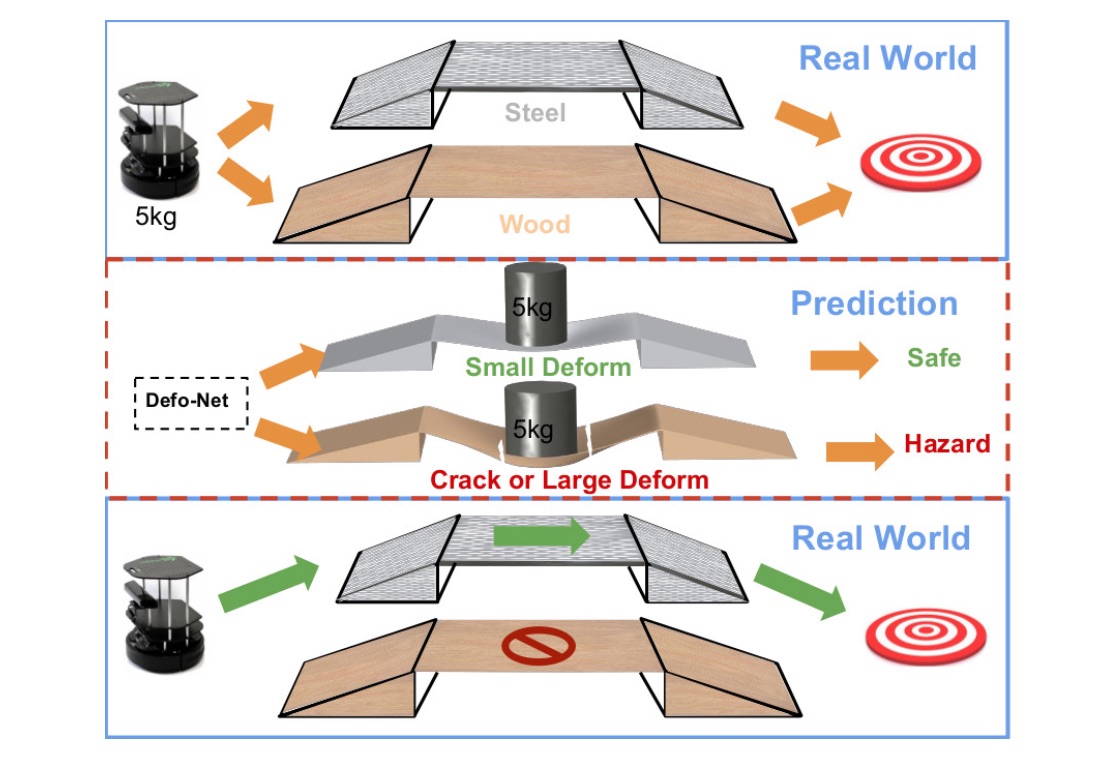

Understanding the intuitive physics of interacting with objects will provide next-generation autonomous agents with a common-sense knowledge base for interacting with a complex, dynamic environment (Wang et al., 2018), (Wang et al., 2018), (Wang et al., 2018).

References

2021

- Learning semantic segmentation of large-scale point clouds with random sampling

  Qingyong Hu, Bo Yang, Linhai Xie, and 5 more authors

  IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021

2020

-

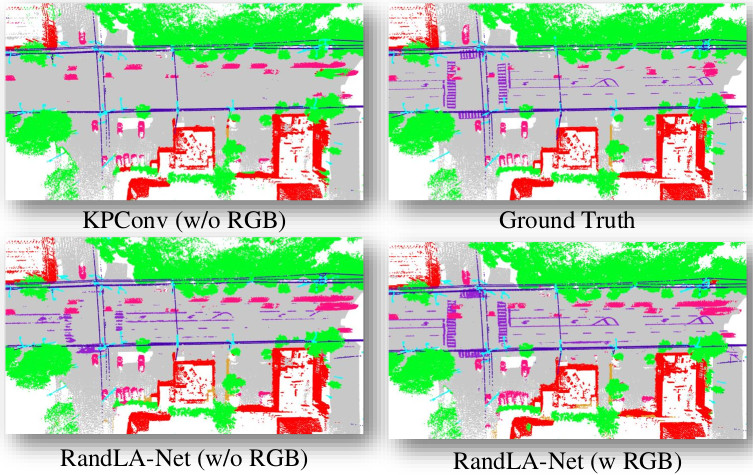

Randla-net: Efficient semantic segmentation of large-scale point clouds

Qingyong

Hu, Bo

Yang, Linhai

Xie, and

5 more authors

In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020

We show that random sampling combined with attention can achieve SOA performances in semantic segmentation while processing large point clouds in near real-time.

2018

-

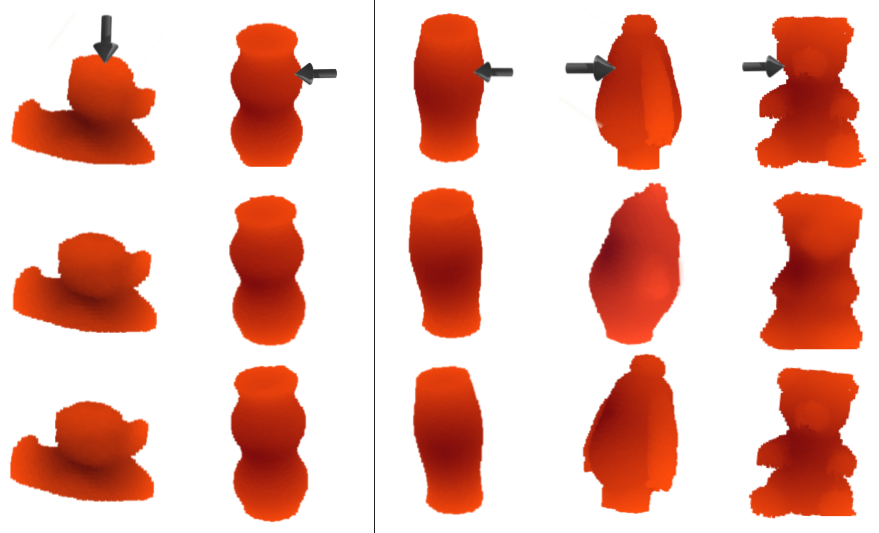

Dense 3D object reconstruction from a single depth view

Bo

Yang, Stefano

Rosa, Andrew

Markham, and

2 more authors

IEEE transactions on pattern analysis and machine intelligence, 2018

We propose an end-to-end approach to high-resolution reconstruction of 3D objects from a single depth image. We also release a real-world dataset for 3D reconstruction. We argue that real-world benchmarks for shape reconstruction are necessary for a thorough validation of future approaches.

- Learning the intuitive physics of non-rigid object deformations

  Zhihua Wang, Stefano Rosa, and Andrew Markham

  In Neural Information Processing Systems (NIPS) Workshops, 2018

- 3D-PhysNet: Learning the intuitive physics of non-rigid object deformations

  Zhihua Wang, Stefano Rosa, Bo Yang, and 3 more authors

  In Proceedings of the 27th International Joint Conference on Artificial Intelligence, Stockholm, SWE, 2018

  We show that conditioning a generative model that predicts soft object deformations on real physical properties can improve prediction accuracy and enable generalisation.

- Defo-Net: Learning body deformation using generative adversarial networks

  Zhihua Wang, Stefano Rosa, Linhai Xie, and 4 more authors

  In 2018 IEEE International Conference on Robotics and Automation (ICRA), 2018

  We show that conditioning a generative model that predicts soft object deformations on real physical properties can improve prediction accuracy and enable generalisation.
