I work on using human experiences to improve semantic understanding of the environment for mobile robots (Rosa et al., 2018), (Rosa et al., 2018).

I study interpretable ways to learn sensor fusion strategies in deep visual-inertial odometry (VIO) frameworks, with the goal of integrating novel sensor modalities such as millimeter-wave (mmWave) radar and thermal imaging into a unified framework for first responders (Chen et al., 2022), (Saputra et al., 2020), (Lu et al., 2020), (Chen et al., 2019).
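To make the idea of a learned, inspectable fusion strategy more concrete, here is a minimal NumPy sketch of soft feature masking in the spirit of selective sensor fusion (Chen et al., 2019). It assumes pre-extracted visual and inertial feature vectors, and masks produced by a single linear layer plus a sigmoid over the concatenated features. The function name `soft_fusion`, the feature sizes, and the random, untrained weights are hypothetical placeholders for illustration, not the published architecture.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def soft_fusion(a_v, a_i, w_v, b_v, w_i, b_i):
    """Hypothetical soft selective fusion: reweight each modality's channels.

    a_v: (d_v,) visual feature, a_i: (d_i,) inertial feature.
    w_v: (d_v, d_v + d_i), w_i: (d_i, d_v + d_i) mask weights (assumed, untrained here).
    Returns the fused feature (d_v + d_i,) and the two masks, each in (0, 1).
    """
    joint = np.concatenate([a_v, a_i])   # each mask is conditioned on both modalities
    s_v = sigmoid(w_v @ joint + b_v)     # per-channel weight for visual features
    s_i = sigmoid(w_i @ joint + b_i)     # per-channel weight for inertial features
    fused = np.concatenate([a_v * s_v, a_i * s_i])
    return fused, s_v, s_i


# Toy inputs: random features and small random weights (illustrative only).
rng = np.random.default_rng(0)
d_v, d_i = 8, 4
a_v = rng.normal(size=d_v)
a_i = rng.normal(size=d_i)
w_v = rng.normal(scale=0.1, size=(d_v, d_v + d_i))
w_i = rng.normal(scale=0.1, size=(d_i, d_v + d_i))
b_v = np.zeros(d_v)
b_i = np.zeros(d_i)

fused, s_v, s_i = soft_fusion(a_v, a_i, w_v, b_v, w_i, b_i)
print(fused.shape)   # (12,)
print(s_v.round(2))  # inspect how strongly each visual channel is kept
```

In a trained model the masks would be learned end to end with the odometry loss; inspecting `s_v` and `s_i` on corrupted inputs is what gives this kind of fusion its interpretability. This sketch covers only deterministic soft masking.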

References

2022


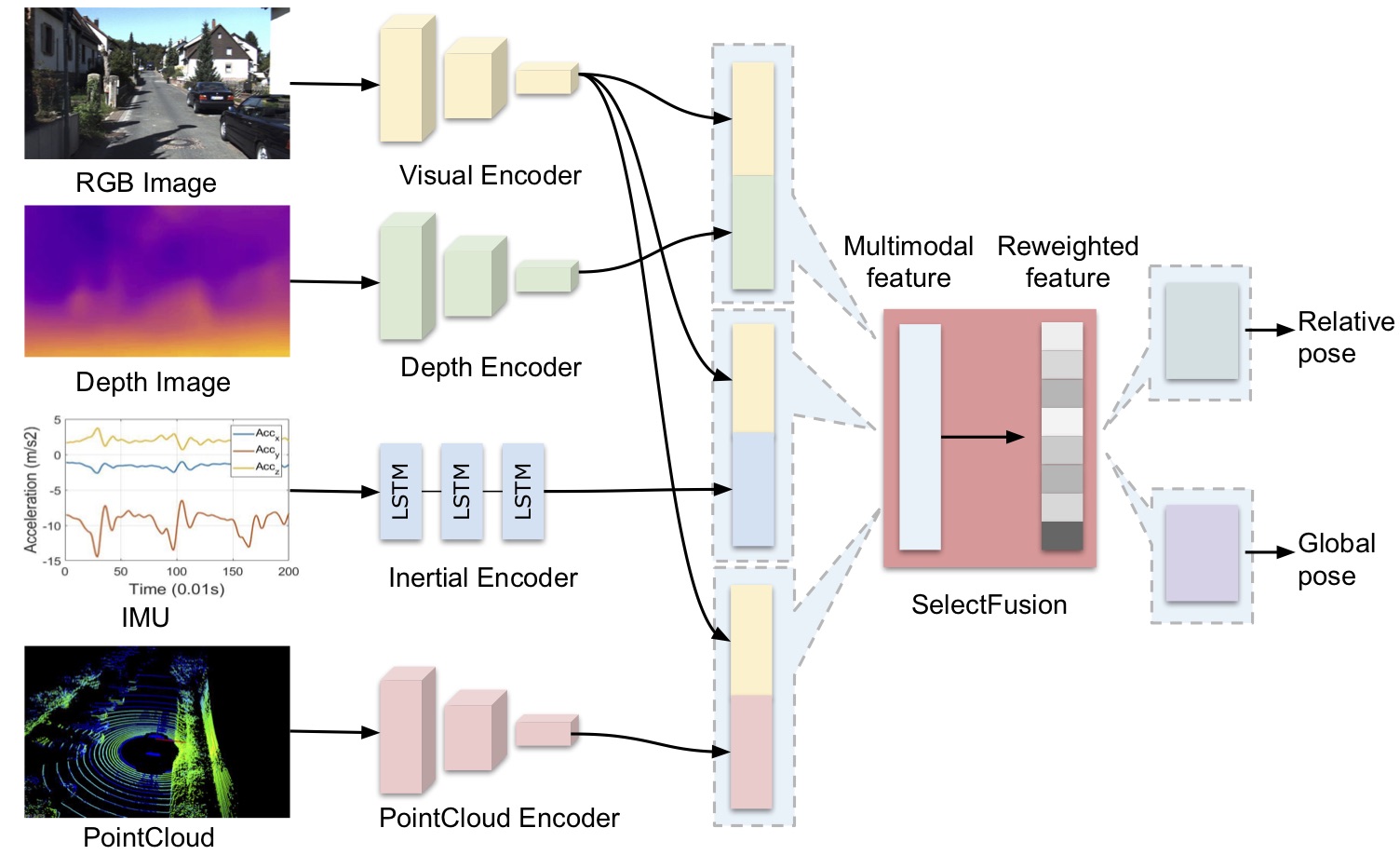

Learning selective sensor fusion for state estimation

Changhao Chen, Stefano Rosa, Chris Xiaoxuan Lu, and 3 more authors

IEEE Transactions on Neural Networks and Learning Systems, 2022

We propose an end-to-end selective sensor fusion module that can be applied to pairs of sensor modalities, such as monocular images with inertial measurements, or depth images with light detection and ranging (LiDAR) point clouds.

2020


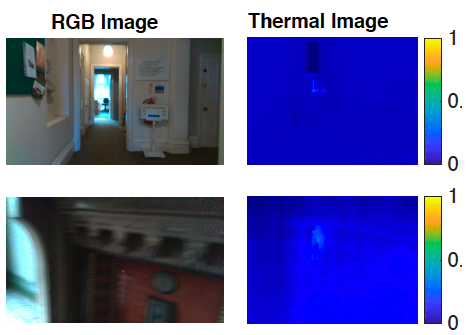

DeepTIO: A deep thermal-inertial odometry with visual hallucination

Muhamad Risqi U Saputra, Pedro PB De Gusmao, Chris Xiaoxuan Lu, and 7 more authors

IEEE Robotics and Automation Letters, 2020

We learn to hallucinate visual features from thermal images, helping first responders navigate visually denied scenarios.


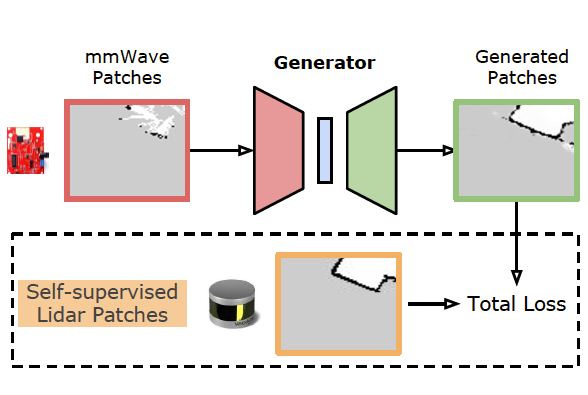

See through smoke: robust indoor mapping with low-cost mmWave radar

Chris Xiaoxuan Lu, Stefano Rosa, Peijun Zhao, and 5 more authors

In Proceedings of the 18th International Conference on Mobile Systems, Applications, and Services, 2020

We show how to build dense occupancy grid maps of indoor environments from sparse, noisy mmWave measurements, with cross-modal training.

2019


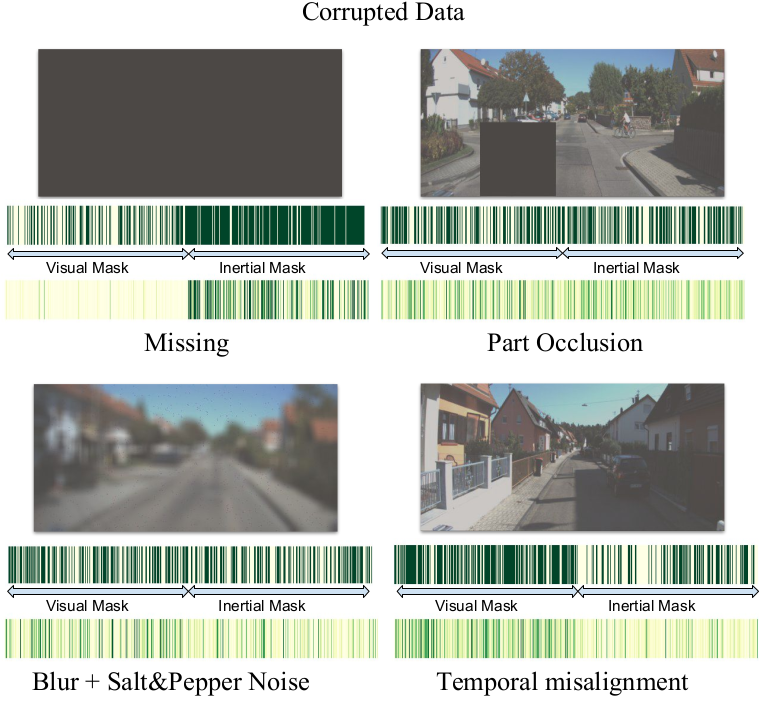

Selective sensor fusion for neural visual-inertial odometry

Changhao Chen, Stefano Rosa, Yishu Miao, and 4 more authors

In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019

We show how learned sensor fusion strategies can improve the accuracy and robustness of deep VIO on noisy or corrupted data, while also adding interpretability.

2018


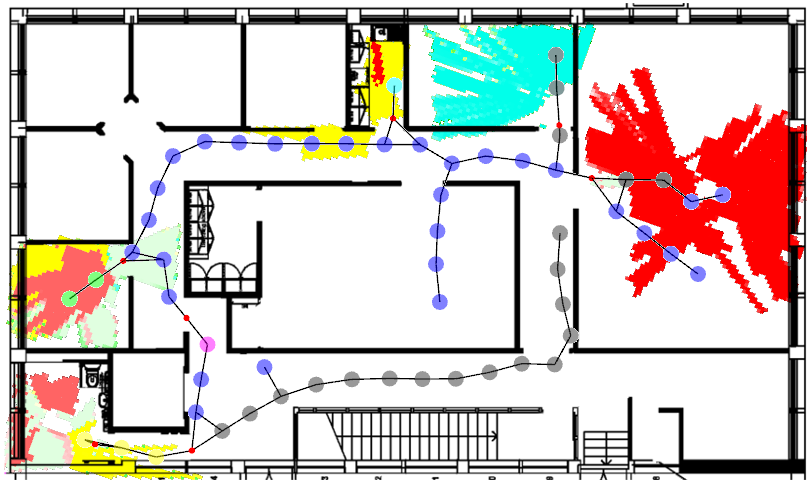

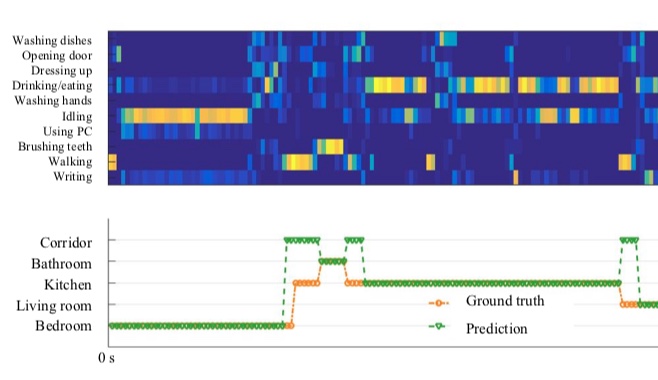

Semantic place understanding for human–robot coexistence—toward intelligent workplaces

Stefano Rosa, Andrea Patanè, Chris Xiaoxuan Lu, and 1 more author

IEEE Transactions on Human-Machine Systems, 2018

Robots and users can work synergistically, learning from each other over time and exploiting each other’s strengths. We show how detecting user activities can help robots learn a semantic understanding of the environment while simultaneously learning to better localise the user.


CommonSense: Collaborative learning of scene semantics by robots and humans

Stefano Rosa, Andrea Patanè, Xiaoxuan Lu, and 1 more author

In Proceedings of the 1st International Workshop on Internet of People, Assistive Robots and Things, 2018
